Unity 2019 added an API for DirectX 12's ray tracing (DXR, the API behind RTX). Nice, finally!

And now Unity 2020.1 adds skinned mesh renderer support! Time to play around a bit.

What's New?

There are a few juicy new additions to Unity's scripting API.

- RayTracingShader - a new type of shader used to generate rays and query them for intersections with geometry.

- RayTracingAccelerationStructure - a GPU memory representation of the geometry to ray trace against.

Getting Started

If you are starting from scratch, the very first thing you need to do is to generate a RayTracingAccelerationStructure. Here's a simple example to get started.

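```csharp
// Requires: using UnityEngine.Experimental.Rendering; (where these types live in Unity 2020.1)
// UpdateLayers is a LayerMask field on your component:
// only renderers on these layers are added to the acceleration structure.
var settings = new RayTracingAccelerationStructure.RASSettings();
settings.layerMask = UpdateLayers;
settings.managementMode = RayTracingAccelerationStructure.ManagementMode.Automatic;
settings.rayTracingModeMask = RayTracingAccelerationStructure.RayTracingModeMask.Everything;

_AccelerationStructure = new RayTracingAccelerationStructure(settings);
_AccelerationStructure.Update();
_AccelerationStructure.Build();
```

Once this has been set up, you're ready to generate rays!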
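The layerMask setting decides which renderers end up in the acceleration structure. Unity layer masks are plain 32-bit bitmasks (bit N set means layer N is included), so the membership check is a single AND. Here is a minimal Python sketch of that check; the helper names and the chosen layers are hypothetical, and it models the mask arithmetic rather than Unity's internal code.

```python
def layer_mask(*layers):
    """Build a 32-bit Unity-style LayerMask with the given layer indices set."""
    mask = 0
    for layer in layers:
        if not 0 <= layer < 32:
            raise ValueError("Unity layers are numbered 0-31")
        mask |= 1 << layer
    return mask


def is_layer_included(mask, layer):
    """True if a renderer on `layer` passes the mask (bit `layer` is set)."""
    return ((mask >> layer) & 1) == 1


# Hypothetical setup: include layer 0 (Default) and layer 8 (a custom layer).
update_layers = layer_mask(0, 8)
print(hex(update_layers))                    # 0x101
print(is_layer_included(update_layers, 8))   # True
print(is_layer_included(update_layers, 5))   # False
```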

Generating Rays

RTX rays are generated from a ray generation shader. If you've used Compute Shaders in Unity, things will look pretty familiar to you.

```hlsl
// MyRayGenerationShader.raytrace
#pragma max_recursion_depth 1

#include "HLSLSupport.cginc"
#include "UnityRaytracingMeshUtils.cginc"
#include "UnityShaderVariables.cginc"

RaytracingAccelerationStructure _RaytracingAccelerationStructure : register(t0);

float4x4 _CameraToWorld;
float4x4 _InverseProjection;

[shader("raygeneration")]
void MyRayGenerationShader()
{
    uint3 dispatchId = DispatchRaysIndex();
    uint3 dispatchDim = DispatchRaysDimensions();

    // somehow get the world space position of the pixel we care about
    // somehow generate a ray from that pixel in a meaningful direction
    // color the pixel we care about, with the information that ray gathered
}
```

That's the skeleton; now let's make it do something meaningful. We'll dispatch one ray from every pixel on the screen, and eventually get those rays intersecting the geometry in the acceleration structure we created earlier.

Also note that the RaytracingAccelerationStructure has to be declared in the shader; t0 is the register Unity uses for it.
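The plan for each ray: take its DispatchRaysIndex(), move to the pixel center, map that into [-1, 1] clip space, and unproject it with the inverse projection matrix to find a point the ray passes through. Here is that arithmetic as a CPU-side Python sketch, handy for sanity-checking directions. It assumes an OpenGL-style projection like Unity's camera.projectionMatrix (view space looking down -Z); the shader uses the platform-specific matrix from GL.GetGPUProjectionMatrix, so depth values differ, but for a given pixel the unprojected point should lie on the same view ray. All helper names are hypothetical.

```python
import math


def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style projection matrix (view space looks down -Z), row-major."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def inverse_perspective(p):
    """Closed-form inverse of the matrix built by perspective()."""
    a, b = p[0][0], p[1][1]
    c, d = p[2][2], p[2][3]
    return [
        [1.0 / a, 0.0, 0.0, 0.0],
        [0.0, 1.0 / b, 0.0, 0.0],
        [0.0, 0.0, 0.0, -1.0],
        [0.0, 0.0, 1.0 / d, c / d],
    ]


def mul(m, v):
    """4x4 matrix times a 4-component column vector, like HLSL mul(m, v)."""
    return [sum(m[r][k] * v[k] for k in range(4)) for r in range(4)]


def pixel_ray_direction(x, y, width, height, inv_proj):
    """View-space unit direction through the center of pixel (x, y)."""
    # Same as the shader: (dispatchId.xy + 0.5) / dispatchDim.xy, in [0, 1]
    u = (x + 0.5) / width
    v = (y + 0.5) / height
    # [0, 1] -> [-1, 1] clip space, z = 0, w = 1
    clip = [u * 2.0 - 1.0, v * 2.0 - 1.0, 0.0, 1.0]
    view = mul(inv_proj, clip)
    # Perspective divide gives a point on this pixel's view ray
    point = [view[i] / view[3] for i in range(3)]
    # The camera sits at the view-space origin, so direction = point, normalized
    length = math.sqrt(sum(c * c for c in point))
    return [c / length for c in point]


inv = inverse_perspective(perspective(90.0, 1.0, 0.3, 1000.0))
print(pixel_ray_direction(1, 1, 3, 3, inv))  # center pixel of a 3x3 grid looks down -Z
```

The same idea carries over to world space: transform the unprojected point with w = 1 (so the camera's translation applies), then subtract the camera position.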

```hlsl
// Inside MyRayGenerationShader():
// pixel center, normalized to [0, 1]
float2 texcoord = (dispatchId.xy + float2(0.5, 0.5)) / float2(dispatchDim.x, dispatchDim.y);

// [0, 1] -> [-1, 1] clip space
float3 viewPosition = float3(texcoord * 2.0 - float2(1.0, 1.0), 0.0);
float4 clip = float4(viewPosition.xyz, 1.0);

// unproject into view space, then transform the point (w = 1) into world space
float4 viewPos = mul(_InverseProjection, clip);
viewPos.xyz /= viewPos.w;
float3 worldPos = mul(_CameraToWorld, float4(viewPos.xyz, 1.0)).xyz;

// normalized, so that TMin/TMax below are measured in world units
float3 worldDirection = normalize(worldPos - _WorldSpaceCameraPos);
```

Now that we know where we want to cast rays from, and in which direction, let's build a ray and its payload. The raytracing API needs both a RayDesc and a custom payload struct, which are passed into a TraceRay() call.

```hlsl
RayDesc ray;
ray.Origin = _WorldSpaceCameraPos;
ray.Direction = worldDirection;
ray.TMin = 0;
ray.TMax = 10000;
```

You can use any payload you wish. Keep in mind that you may run into issues with payloads that have a large memory footprint, so try to keep them as small as possible. In this example, we'll just use a color.

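```hlsl
// declared at file scope, outside the function
struct MyPayload
{
    float4 color;
};

// inside MyRayGenerationShader():
MyPayload payload;
payload.color = float4(0, 0, 0, 0);

// args: acceleration structure, ray flags, instance inclusion mask,
// hit group offset, geometry multiplier, miss shader index, ray, payload
TraceRay(_RaytracingAccelerationStructure, 0, 0xFF, 0, 1, 0, ray, payload);
```

TraceRay() is another magic call the raytracing API gives us, and I highly recommend reading its DXR documentation for the finer details. At the least, you'll need to pass in an acceleration structure, some ray flags controlling intersection rules, an instance inclusion mask (only its low 8 bits are used, so 0xFF includes everything), the RayDesc, and the custom payload.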
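That inclusion mask is DXR's InstanceInclusionMask: only its low 8 bits are used, and an instance is tested only if those bits overlap the instance's own 8-bit InstanceMask. A small Python sketch of the rule as the DXR spec describes it; `instance_is_visible` is a hypothetical helper name. It also shows why a wider mask such as 0xFFFFFFF behaves exactly like 0xFF.

```python
def instance_is_visible(instance_mask, inclusion_mask):
    """True if TraceRay() would consider this instance (DXR instance-mask rule)."""
    # Only the low 8 bits take part; anything above bit 7 is ignored.
    return ((inclusion_mask & instance_mask) & 0xFF) != 0


full = 0xFF  # an instance whose mask has every bit set
for inclusion in (0xFF, 0xFFFFFFF, 0x01, 0x100):
    print(hex(inclusion), instance_is_visible(full, inclusion))
# 0xff True
# 0xfffffff True
# 0x1 True
# 0x100 False
```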

After you've traced a ray, you need to do something with the payload result.

```hlsl
// declared at file scope
RWTexture2D<float4> RenderTarget;

// at the end of MyRayGenerationShader()
RenderTarget[dispatchId.xy] = payload.color;
```

This traced ray may or may not intersect geometry. If it doesn't, you'll probably want to provide a "miss" kernel.

```hlsl
[shader("miss")]
void MyMissShader(inout MyPayload payload : SV_RayPayload)
{
    payload.color = 0;
}
```

As the name implies, this miss kernel is called when the ray doesn't hit anything.

Take note of that inout keyword. For our purposes, you can think of it as similar to C#'s ref keyword, although it's a bit different: inout actually copies the value in and copies it back out, rather than passing a reference around.

On the C# side, you'll need a component that references the ray generation shader and then dispatches it.

```csharp
// Define a RenderTexture, to see what's going on.
// If it's public, you can double-click it in the Inspector to preview it
// while the Game view is focused.
// It must be created with enableRandomWrite = true so the shader can write to it.
public RenderTexture _RenderTexture;

public RayTracingShader MyRayGenerationShader;
```

I recommend using a CommandBuffer to dispatch the ray generation shader, but you can also call Dispatch() on the RayTracingShader directly.

```csharp
var command = new CommandBuffer(); // UnityEngine.Rendering.CommandBuffer
// camera is the Camera you're rendering from

command.SetRayTracingTextureParam(MyRayGenerationShader, "RenderTarget", _RenderTexture);
// must match the Name of the hit shader's Pass, "MyRaytraceShaderPass"
command.SetRayTracingShaderPass(MyRayGenerationShader, "MyRaytraceShaderPass");
command.SetRayTracingAccelerationStructure(MyRayGenerationShader, "_RaytracingAccelerationStructure", _AccelerationStructure);

var projection = GL.GetGPUProjectionMatrix(camera.projectionMatrix, false);
var inverseProjection = projection.inverse;
command.SetRayTracingMatrixParam(MyRayGenerationShader, "_InverseProjection", inverseProjection);
command.SetRayTracingMatrixParam(MyRayGenerationShader, "_CameraToWorld", camera.cameraToWorldMatrix);
command.SetRayTracingVectorParam(MyRayGenerationShader, "_WorldSpaceCameraPos", camera.transform.position);

command.DispatchRays(MyRayGenerationShader, "MyRayGenerationShader",
    (uint)_RenderTexture.width, (uint)_RenderTexture.height, 1u, camera);

Graphics.ExecuteCommandBuffer(command);
```

Once this dispatch runs, you'll be generating rays! However, they won't do anything when they hit geometry, yet.

Hit!

To do anything interesting, you'll need to provide a hit kernel. In Unity, you can do this in the .shader file of any shader by adding a Pass{} block. When you dispatch rays, you can use SetRayTracingShaderPass to specify a name, and when a ray intersects an object, Unity will check for any Passes that match that name.

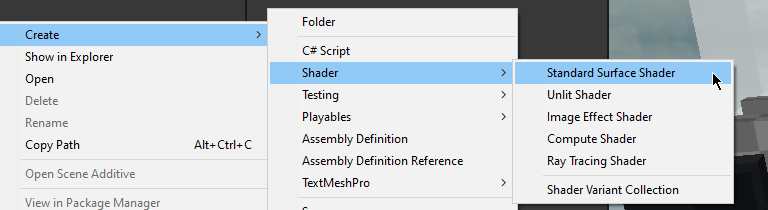

As an example, let's create a new shader.

Open it up and add something like this: a second SubShader block, after the existing one.

```hlsl
SubShader
{
    Pass
    {
        // SetRayTracingShaderPass() looks passes up by this name
        Name "MyRaytraceShaderPass"

        HLSLPROGRAM

        #pragma raytracing MyHitShader

        // must match the payload struct in the ray generation shader
        struct MyPayload
        {
            float4 color;
        };

        struct AttributeData
        {
            float2 barycentrics;
        };

        [shader("closesthit")]
        void MyHitShader(inout MyPayload payload : SV_RayPayload,
                         AttributeData attributes : SV_IntersectionAttributes)
        {
            payload.color = 1;
        }

        ENDHLSL
    }
}
```

HLSL has a new attribute for this: [shader("")], which defines what type of raytracing shader a function is. The most useful one for us is "closesthit", which runs for the intersection nearest to the ray's origin.

Here is a list of the available DXR shader types, with their method signatures.

```hlsl
[shader("raygeneration")]
void RayGeneration() {}

[shader("intersection")]
void Intersection() {}

[shader("miss")]
void Miss(inout MyPayload payload : SV_RayPayload) {}

[shader("closesthit")]
void ClosestHit(inout MyPayload payload : SV_RayPayload, AttributeData attributes : SV_IntersectionAttributes) {}

[shader("anyhit")]
void AnyHit(inout MyPayload payload : SV_RayPayload, AttributeData attributes : SV_IntersectionAttributes) {}
```
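To see how these stages fit together for a single TraceRay() call, here is a simplified CPU-side Python model of the control flow: candidates outside [TMin, TMax] are skipped, an any-hit function may ignore a candidate, each accepted hit shrinks TMax so only closer hits can replace it, and the nearest accepted hit goes to closest-hit; if nothing is accepted, miss runs instead. Intersection testing is abstracted into a precomputed list of candidate distances, every name here is hypothetical, and real hardware may visit candidates in any order.

```python
from dataclasses import dataclass


@dataclass
class Payload:
    color: tuple = (0.0, 0.0, 0.0, 0.0)


@dataclass
class Candidate:
    t: float        # distance along the ray
    instance: str   # which object was hit (for illustration)


def trace_ray(candidates, t_min, t_max, payload, any_hit, closest_hit, miss):
    """Simplified model of one TraceRay(): returns the committed candidate or None."""
    committed = None
    for cand in candidates:
        if not (t_min <= cand.t <= t_max):
            continue                      # outside the current ray interval
        if any_hit is not None and not any_hit(payload, cand):
            continue                      # any-hit ignored it (e.g. an alpha test)
        committed = cand
        t_max = cand.t                    # later candidates must be closer
    if committed is None:
        miss(payload)
    else:
        closest_hit(payload, committed)
    return committed


def miss(payload):
    payload.color = (0.0, 0.0, 0.0, 0.0)  # like MyMissShader: black


def closest_hit(payload, hit):
    payload.color = (1.0, 1.0, 1.0, 1.0)  # like MyHitShader: white


hits = [Candidate(12.0, "wall"), Candidate(5.0, "crate"), Candidate(-1.0, "behind")]
p = Payload()
print(trace_ray(hits, 0.0, 10000.0, p, None, closest_hit, miss).instance, p.color)
# crate (1.0, 1.0, 1.0, 1.0)
```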

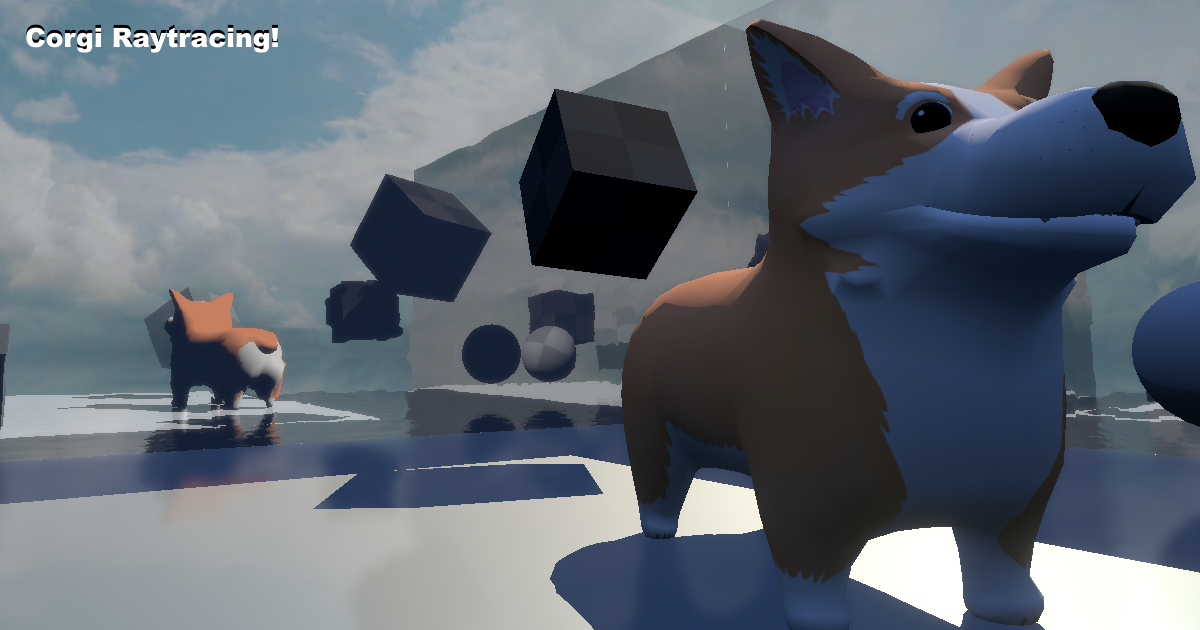

Now our ray generation shader will output black for any pixel whose ray misses all geometry, and white for any pixel whose ray hits geometry. We're raytracing!

What can we do with this?

The raytracing API that DX12 is providing us unlocks some great stuff.

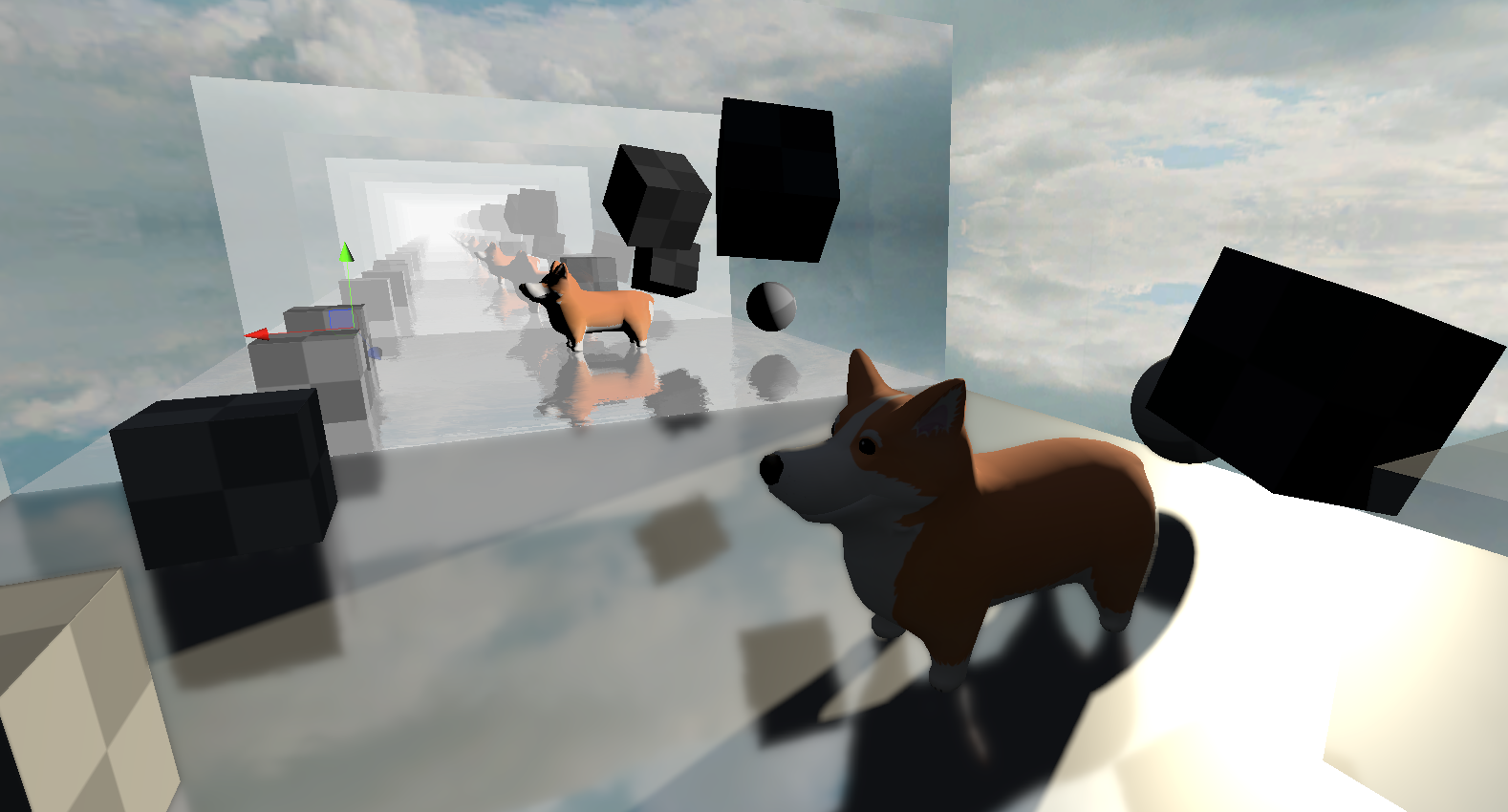

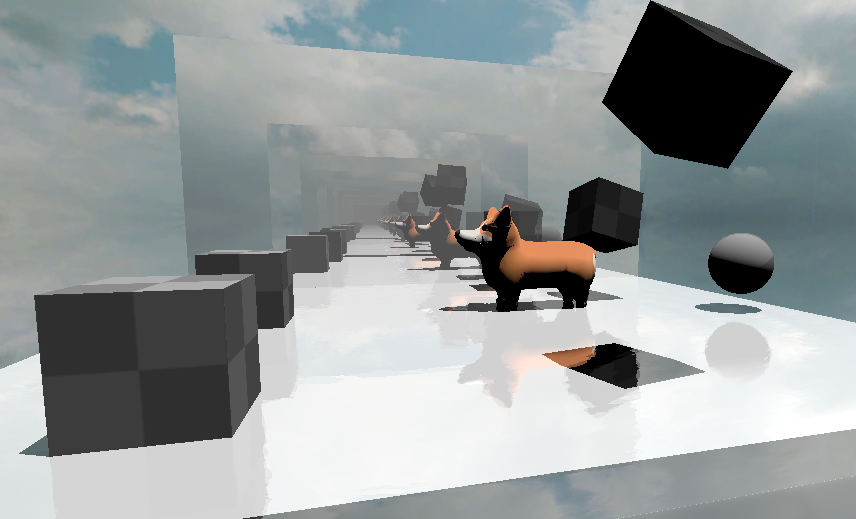

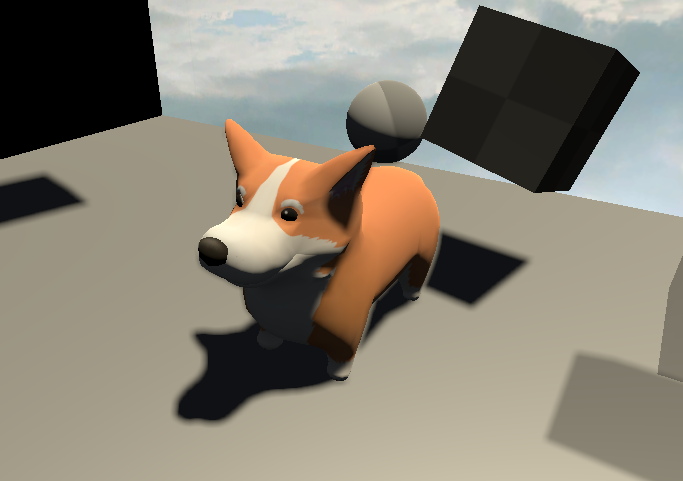

Here's a screenshot of some raytraced reflections I've thrown together.

This can be taken a step further, too. Why not have shadows in our reflections?

And if our reflections can have raytraced shadows, why not replace Unity's shadows completely with raytraced shadows?
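Both effects come down to tracing secondary rays from the closest-hit point (inside a hit shader, that point is typically WorldRayOrigin() + WorldRayDirection() * RayTCurrent()). A reflection ray uses the mirror direction r = d - 2(d·n)n; a shadow ray points at the light with TMax set to the distance to it, so any hit in between means the point is in shadow. A minimal Python sketch of building those two rays, assuming a unit surface normal and a point light; the small origin offset (`EPSILON`) is a common trick to keep a ray from re-hitting the surface it starts on, and all names are hypothetical.

```python
import math

EPSILON = 1e-3  # origin offset so secondary rays don't re-hit their own surface


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def add(a, b, scale=1.0):
    """Component-wise a + b * scale."""
    return tuple(x + y * scale for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def reflection_ray(hit_pos, ray_dir, normal):
    """Returns (origin, direction, t_min, t_max) for the mirror ray r = d - 2(d.n)n."""
    r = add(ray_dir, normal, -2.0 * dot(ray_dir, normal))
    return add(hit_pos, normal, EPSILON), normalize(r), 0.0, 10000.0


def shadow_ray(hit_pos, normal, light_pos):
    """Returns a ray toward a point light whose t_max stops exactly at the light."""
    origin = add(hit_pos, normal, EPSILON)
    to_light = add(light_pos, origin, -1.0)
    distance = math.sqrt(dot(to_light, to_light))
    return origin, normalize(to_light), 0.0, distance


# A ray heading down and forward hits a floor (normal +Y) at the origin.
print(reflection_ray((0.0, 0.0, 0.0), normalize((0.0, -1.0, 1.0)), (0.0, 1.0, 0.0)))
print(shadow_ray((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 5.0, 0.0)))
```

For shadow rays you only care whether anything is hit, which is what DXR's RAY_FLAG_ACCEPT_FIRST_HIT_AND_END_SEARCH flag is for.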

its about time voxelgame got some #rtx support #gamedev #madewithunity pic.twitter.com/w2KuLFFcf0

— Coty Getzelman (@cotycrg) November 28, 2020

Getting Useful Data From Intersections

```hlsl
struct AttributeData
{
    float2 barycentrics;
};
```

Using a user-defined struct like this, you can receive data about each intersection. For triangles, DXR fills in the barycentric coordinates of the hit point, which you can use to interpolate data from the triangle's vertices. For example, you could use this to get the albedo of the triangle with proper UV mapping, or even shade the triangle as usual.
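The barycentrics DXR reports are two of the three weights of the hit point: with barycentrics = (u, v), vertex 0 gets 1 - u - v, vertex 1 gets u and vertex 2 gets v. The GPU computes these for you. The Python sketch below uses the Möller-Trumbore ray/triangle test, a standard CPU algorithm and not necessarily what the hardware does, just to show where such a (t, u, v) triple comes from; it follows the same weighting convention.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def intersect_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Möller-Trumbore. Returns (t, u, v) or None; u weights v1, v weights v2.

    Negative t is not rejected here: in DXR, TMin/TMax handle that.
    """
    e1 = sub(v1, v0)
    e2 = sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None                     # ray parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return (t, u, v)


tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(intersect_triangle((0.25, 0.25, -1.0), (0.0, 0.0, 1.0), *tri))  # (1.0, 0.25, 0.25)
```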

```hlsl
#include "UnityRaytracingMeshUtils.cginc"

struct Vertex
{
    float2 texcoord;
};

// Inside the closesthit shader:
uint primitiveIndex = PrimitiveIndex();
uint3 triangleIndices = UnityRayTracingFetchTriangleIndices(primitiveIndex);

Vertex v0, v1, v2;
v0.texcoord = UnityRayTracingFetchVertexAttribute2(triangleIndices.x, kVertexAttributeTexCoord0);
v1.texcoord = UnityRayTracingFetchVertexAttribute2(triangleIndices.y, kVertexAttributeTexCoord0);
v2.texcoord = UnityRayTracingFetchVertexAttribute2(triangleIndices.z, kVertexAttributeTexCoord0);

// DXR's barycentrics weight vertices 1 and 2; vertex 0 gets the remainder
float3 barycentrics = float3(1.0 - attributes.barycentrics.x - attributes.barycentrics.y,
                             attributes.barycentrics.x,
                             attributes.barycentrics.y);

Vertex vInterpolated;
vInterpolated.texcoord = v0.texcoord * barycentrics.x + v1.texcoord * barycentrics.y + v2.texcoord * barycentrics.z;
```

From here, you can sample textures almost as usual. Ray tracing shaders have no screen-space derivatives, so use SampleLevel() with an explicit mip level rather than Sample().

```hlsl
Texture2D<float4> _MainTex;
SamplerState sampler_MainTex;

[shader("closesthit")]
void MyHitShader(inout MyPayload payload : SV_RayPayload, AttributeData attributes : SV_IntersectionAttributes)
{
    // compute vInterpolated.texcoord from the triangle's vertices, as shown above
    // ...

    // explicit mip level 0
    payload.color = _MainTex.SampleLevel(sampler_MainTex, vInterpolated.texcoord, 0);
}
```
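The interpolation step is easy to check on the CPU. A minimal Python sketch mirroring the hit shader's weighting, (1 - u - v, u, v) applied to the three vertices' attributes; `interpolate` and the UV values are made up for illustration.

```python
def interpolate(attr0, attr1, attr2, barycentrics):
    """Blend per-vertex attributes the way the hit shader does.

    barycentrics is DXR's float2 (u, v): vertex 0 gets 1 - u - v,
    vertex 1 gets u, vertex 2 gets v.
    """
    u, v = barycentrics
    w = (1.0 - u - v, u, v)
    return tuple(w[0] * a + w[1] * b + w[2] * c for a, b, c in zip(attr0, attr1, attr2))


# Hypothetical UVs for one triangle
uv0, uv1, uv2 = (0.0, 0.0), (1.0, 0.0), (0.0, 1.0)
print(interpolate(uv0, uv1, uv2, (0.25, 0.25)))  # (0.25, 0.25)
```

At (0, 0) you get vertex 0's attribute back unchanged, at (1, 0) vertex 1's, and at (0, 1) vertex 2's.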

Some useful data! You can take this further by calling TraceRay() inside your closesthit shader passes. Keep in mind that you'll need to increase the value in #pragma max_recursion_depth 1 when calling TraceRay() from a hit shader. You'll also need to manage recursion yourself, making sure you never trace deeper than that limit, or you may crash!
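One common way to manage recursion yourself is to carry a depth counter in the payload and only call TraceRay() again while it's below the limit you declared with max_recursion_depth. The Python sketch below models that for a hypothetical scene where every ray hits a half-reflective surface; `MAX_RECURSION_DEPTH`, the `depth` field and the 0.2/0.5 shading numbers are assumptions for illustration, not Unity or DXR names.

```python
from dataclasses import dataclass

MAX_RECURSION_DEPTH = 3  # would correspond to '#pragma max_recursion_depth 3'


@dataclass
class Payload:
    color: float = 0.0
    depth: int = 0  # how many TraceRay() calls are on the stack


def trace_ray(payload, visited_depths):
    """Hypothetical TraceRay(): every ray hits the same reflective surface."""
    payload.depth += 1
    if payload.depth > MAX_RECURSION_DEPTH:
        # On a GPU, exceeding the limit is undefined behavior and can crash.
        raise RuntimeError("exceeded max_recursion_depth")
    closest_hit(payload, visited_depths)
    payload.depth -= 1


def closest_hit(payload, visited_depths):
    visited_depths.append(payload.depth)
    surface = 0.2  # this surface's own brightness
    if payload.depth < MAX_RECURSION_DEPTH:
        # Budget left: trace a reflection ray and add half of what it sees.
        reflected = Payload(depth=payload.depth)
        trace_ray(reflected, visited_depths)
        payload.color = surface + 0.5 * reflected.color
    else:
        payload.color = surface  # out of budget: stop bouncing


depths = []
primary = Payload()
trace_ray(primary, depths)  # the ray generation shader's call
print(depths, primary.color)
```

With a limit of 3, the ray generation shader's TraceRay() counts as the first level, so the chain stops after two reflection bounces and the guard never lets the error path fire.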

Self Promo

If you just want to download some source code, I'm providing it all in the form of a Unity Asset Store package. The raytraced reflections and raytraced shadows are both packaged with it, of course. I've also included a lot of helpful macros, for quickly making your own raytracing shaders.

Further Reading

I highly recommend the following for further reading.